IBM recently held IBM Think 2018, a first-of-its-kind conference dedicated to the technological innovations and research going on inside IBM and the world at large. The multi-day conference covered everything from the rise of big data to blockchain and its uses outside of cryptocurrency, and even quantum computing.

No conference discussing technology would be complete without a mention of artificial intelligence, and IBM did not disappoint. For many recruitment process outsourcing and talent acquisition experts, AI is still a big wild card when it comes to the future of the industry. Industry talent leaders such as Tricia Tamkin, Partner at Moore eSSentials, a training and consulting organization for recruiters and researchers, believe that AI will ultimately prove a benevolent and useful tool in the recruiter’s arsenal once we iron out the problems currently plaguing its implementation.

According to Tamkin, AI is unlikely to replace the entire recruiting force; instead, “it’s far more likely that some of the mundane tasks that are required when hiring will be eliminated.”

One of these problems is on the radar of researchers at IBM. Francesca Rossi, IBM researcher on AI ethics and full professor of computer science at the University of Padova, provided a glimpse into a major challenge currently facing the development of artificial intelligence: bias.

In the presentation “IBM Research Science Slam: Unveiling 5 Breakthrough Technologies That Will Change the World,” Rossi explains that 30 years ago researchers were curious about how AI could be achieved. Back then, there was no discussion of how AI would actually affect our lives.

Now that AI is a reality, conversations about how to use it responsibly are finally taking place, and bias is a chief concern if AI is to become a helpful part of our lives.

A Bias Problem

We tend to think of bias as a problem stemming from and concerning human interaction. Unconscious bias, for example, has long been an enemy of diversity in the workplace, producing far more homogeneous work environments and limiting opportunities for those who don’t fit the existing mold.

AI, in theory, could choose the best candidate for the job without letting factors such as background or skin color sway the decision. The problem, Rossi explains, is that AI, no matter how intelligent, is programmed by humans, and most humans have some level of bias.

“AI will try to generalize its data to deal with new situations. Think of Google image identification. If samples aren’t diverse enough or representative enough, the AI will have problems generalizing to other pictures.”

What’s Wrong With Bias

The most common example of bias in the talent acquisition industry is found in candidate selection. Numerous studies have shown that certain candidates are favored over others based on characteristics such as ethnicity, skin color, or education.

“We’re wired to like people like us, and wired to trust people we like,” says Kim Ruyle, President of Inventive Talent Consulting. Ruyle discussed how the brain affects our decision-making skills in his presentation “Finding Authentic High Potential Talent in the Crowd: Where’s Waldo?”

“In turn, we often are drawn to hire people that are similar to us in some way,” he notes.

Gender is another frequent source of bias, one that many corporations have struggled with in recent years. Google has faced multiple lawsuits claiming that the organization discriminates against women when it comes to wages (and has even received a recent complaint that it discriminates against white, conservative men).

Bias has largely driven the pay disparities that exist between men and women, and between different ethnicities, in the same type of position, despite similar educational backgrounds or experience in the field.

In the end, biased recruiting decisions may even pass over a top-level candidate, simply because the choice was based on factors unrelated to the job requirements.

What Can We Do About It?

According to Rossi, eliminating bias from our AI systems will take the same kind of effort as eliminating bias from our in-person interactions: inclusion and diversity.

“Only a very diverse and inclusive approach can help shape AI in a way that is trustworthy and helpful,” explains Rossi. That means multi-gender, multi-cultural, and multi-stakeholder, she adds.

Janice Omadeke, CEO and Founder of The Mentor Method, agrees. “Your HR team may have a challenging time identifying areas of bias since they’re already immersed in the existing company culture,” she explains. In such cases, it helps to reach out to outside resources that can assess your company’s hiring practices and culture, and make suggestions to improve opportunities for diverse hiring.

IBM has also researched how to detect and mitigate bias when collecting data, and has taught its own AI systems how to identify instances of bias in data sets. This work suggests that technology may one day help remove negative bias on its own, without human supervision.

We’re not quite there yet, warns Rossi. But over the next five years, she predicts, bias in AI will be tamed and eliminated by those who care about the responsible use of AI. Those AI systems, and the companies that leverage them, will be the only ones trusted and adopted by consumers and businesses in the long term. And according to Rossi, researching bias in AI helps us as well:

“The more we learn and understand about AI bias, the more we recognize our own bias. The more we inject anti-bias into AI, the more the AI system can stop us from continuing to be biased…To build trust between humans and machines, we may learn how to improve more than just AI. We might also improve ourselves.”

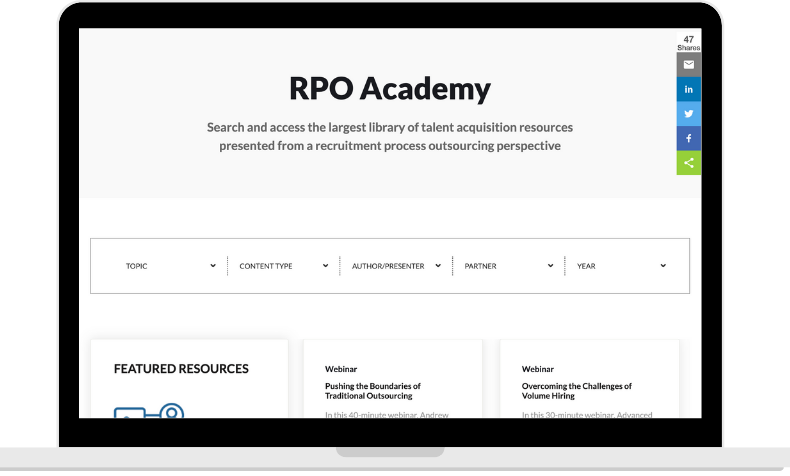

Stay tuned for future articles from the Recruitment Process Outsourcing Association as we provide coverage on improving diversity and eliminating bias while choosing the right candidate for the job.