Having been at the intersection of AI and talent for over a decade, I've witnessed firsthand the transformative potential of artificial intelligence in the recruiting space. However, I have also seen how AI deployed irresponsibly can go wrong, affecting people’s careers and livelihoods. With great power comes great responsibility. Today, I want to share my thoughts on the critical importance of AI governance in recruiting and how organizations can ensure compliant AI adoption.

The Early Automobile Analogy

To highlight the importance of governance, I often draw an analogy to another transformative technology: the motor vehicle. When automobiles first came out, they had no sensors, dashboards, rearview mirrors, or check engine lights. Early drivers went so slowly out of safety concerns that some doubted adoption would ever take off. Over time, a regulatory apparatus (driver's licenses, speed limits, and law enforcement) combined with safety technology (seatbelts, airbags, and child safety locks) built so much trust that we now drive at 70 mph without a second thought. Widespread automobile adoption required bridging the “trust gap.”

Similarly, for AI to be impactful and meaningfully adopted, we need to build trust in AI systems, which will require a combination of smart regulations and technology.

New regulations like the EU AI Act and Colorado AI Act SB 205 are designed to add essential safety features to the rapidly evolving AI industry. Just as dashboards provide vital information and seatbelts protect drivers and passengers, these regulations safeguard businesses and consumers. They compel AI developers to implement critical protections against bias and unfairness, guiding the industry toward more responsible and ethical AI solutions.

These legislative measures build a safer AI infrastructure that supports faster, more reliable innovation in the long run.


The Need For AI Governance in Recruiting

AI recruiting has become a focal point in the AI ethics conversation due to its profound impact on individuals' livelihoods. As AI systems increasingly influence hiring decisions, they hold the power to shape workforce diversity, economic opportunities, and social mobility on a large scale. In the world of recruiting, AI is everywhere. AI-powered tools are revolutionizing talent acquisition, transforming everything from resume screening and candidate matching to interviewing and chatbot interactions.

For that reason, many regulations, including the EU AI Act, Colorado AI Act SB 205, NYC LL144, and the OMB's AI policy for federal agencies, treat AI systems that can affect employment decisions as high-risk or subject them to heightened requirements.

AI in recruiting touches on sensitive areas such as equal opportunity, fairness, and potential bias in decision-making processes. With emerging regulations, recruiters are at the forefront of navigating the complex intersection of technology, ethics, and compliance. This heightened scrutiny makes recruiting a critical testing ground for responsible AI practices, setting precedents that could influence AI governance across other industries and functions.

Best Practices for AI Governance in Recruiting

Based on our experience at FairNow and insights from HR leaders, I recommend implementing a comprehensive AI governance framework.

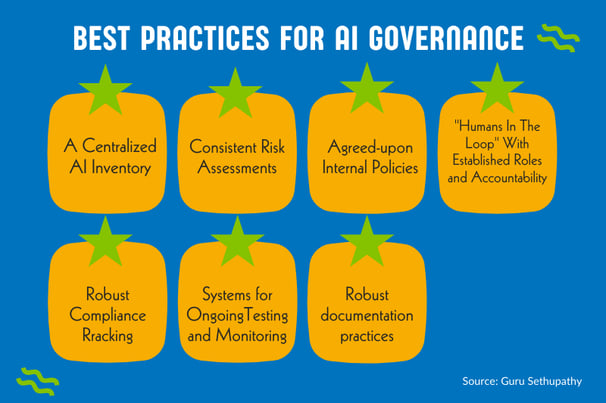

A strong AI governance framework includes seven key components:

- A centralized AI inventory: Many teams don’t even know how many models they are running, which exposes them to significant business risks, from compliance violations to security vulnerabilities. A comprehensive inventory of all AI applications should detail each tool's purpose, data usage, risks, and compliance status. This centralized overview ensures every AI tool is accounted for and monitored, so teams can spot potential issues early.

- Consistent risk assessments: Regular risk assessments of AI applications help teams identify potential issues proactively, uncovering and mitigating business risks such as compliance violations and security vulnerabilities before they cause harm.

- Agreed-upon internal policies: Agreed-upon internal policies around transparency, remediation, documentation, and AI ethics help ensure that your AI decision-making processes are forthright and explainable, both to internal stakeholders and candidates.

- "Humans In The Loop" with established roles and accountability: Especially in high-risk use cases such as recruitment, AI models require human oversight with clearly defined roles and accountability. The most effective organizations have buy-in from leadership, data privacy, compliance, legal, risk management, procurement, and HR teams. These teams collaborate to determine their risk exposure and appetite. Well-defined accountability measures ensure responsibility for AI decisions, a vital component of good governance.

- Robust compliance tracking: Teams should implement comprehensive systems to track compliance with rapidly evolving AI regulations. This includes laws already in force, such as NYC’s Local Law 144, as well as broader frameworks such as the EU AI Act, whose obligations phase in over several years.

- Systems for ongoing testing and monitoring: Testing and monitoring should be an ongoing process rather than a one-time task. Organizations must ensure that their AI systems do not perpetuate bias, especially systems that inform employment decisions, such as automated employment decision tools (AEDTs). Teams that prioritize continuous testing and monitoring can detect and correct anomalies, biases, and performance issues before they escalate.

- Robust documentation practices: Maintaining detailed records of these efforts not only aids in regulatory compliance but also supports the continuous improvement of AI governance within the organization.
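To make the testing and monitoring component more concrete: bias audits under NYC Local Law 144 report an impact ratio for each demographic category, defined as that category's selection rate divided by the selection rate of the most-selected category. The Python below is a minimal sketch of that calculation, not FairNow's implementation. The category names, counts, and function names are hypothetical, and the 0.8 flagging cutoff is an assumption borrowed from the EEOC's "four-fifths" rule of thumb, because LL144 itself sets no pass/fail threshold.

```python
from typing import Dict, List, Tuple

# Hypothetical input format: category -> (candidates selected, total candidates assessed)
Outcomes = Dict[str, Tuple[int, int]]


def selection_rates(outcomes: Outcomes) -> Dict[str, float]:
    """Selection rate = selected / total for each category."""
    rates = {}
    for category, (selected, total) in outcomes.items():
        if total <= 0 or not 0 <= selected <= total:
            raise ValueError(f"invalid counts for {category!r}")
        rates[category] = selected / total
    return rates


def impact_ratios(outcomes: Outcomes) -> Dict[str, float]:
    """Each category's selection rate divided by the highest selection rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no candidates were selected in any category")
    return {category: rate / highest for category, rate in rates.items()}


def flag_categories(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Categories whose ratio falls below the threshold.

    The 0.8 default mirrors the four-fifths heuristic; it is an assumption,
    not a legal standard under LL144.
    """
    return sorted(c for c, r in ratios.items() if r < threshold)


if __name__ == "__main__":
    # Hypothetical screening results, for illustration only.
    outcomes = {"group_a": (50, 100), "group_b": (25, 100), "group_c": (45, 90)}
    ratios = impact_ratios(outcomes)
    print(ratios)                   # {'group_a': 1.0, 'group_b': 0.5, 'group_c': 1.0}
    print(flag_categories(ratios))  # ['group_b']
```

A real LL144 audit also reports intersectional categories (for example, sex combined with race/ethnicity), and "ongoing" monitoring means rerunning a check like this as new hiring data arrives rather than once at deployment.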

Overcoming Common Challenges

As the industry evolves at a breakneck pace, implementing robust AI governance in recruiting isn't without its challenges. Some common hurdles I’ve seen include:

- Lack of Internal Expertise: Many recruiting functions struggle with a knowledge gap regarding AI and governance. The specialized nature of AI technology can make it difficult to oversee and manage AI systems effectively without domain expertise.

- Complexity of Vendor-Built Systems: The use of third-party AI vendors introduces additional layers of complexity. Recruiting teams often deal with 'black box' systems where the inner workings are opaque. This lack of transparency can make it challenging to assess and mitigate risks associated with these vendor-built AI tools.

- Lack of Centralization and Documentation: Organizations frequently lack a comprehensive inventory of the AI models they're using across their recruiting processes. This decentralized approach makes it difficult to track, monitor, and govern AI applications effectively. Without proper documentation, organizations risk overlooking potential compliance issues or inconsistencies in their AI use.

- Rapidly Changing Regulatory Landscape: The pace of AI regulation is accelerating, with new laws and guidelines emerging every month. Many organizations struggle to keep up with these rapid changes, leaving them at risk of non-compliance. The challenge lies not just in understanding these regulations but in quickly adapting AI systems and processes to meet new requirements.

Strategies to Overcome AI Governance Challenges

While governance can seem daunting, it doesn't have to be difficult. Based on what we have seen and implemented over the last decade in highly regulated industries, we recommend the following strategies:

- Invest in AI Literacy Programs: Develop comprehensive training initiatives to bridge the knowledge gap within your recruiting teams. These programs should cover the basics of AI functionality, potential biases in AI systems, and the principles of ethical AI use in hiring. By empowering your team with this knowledge, you're equipping them to be active participants in AI governance rather than passive consumers of AI tools.

- Demand Transparency from AI Vendors: When working with third-party AI vendors, adopt a rigorous approach to transparency. Request detailed information about how their AI models work, what data they use, and how they mitigate bias.

- Leverage technology for simplification, centralization, and documentation: Consider utilizing an AI governance platform to centralize and operationalize your AI governance processes. Tools like FairNow are designed specifically to assist in implementing and maintaining effective AI governance programs.

- Utilize an AI regulatory compliance tool: In a rapidly evolving regulatory landscape, AI regulatory compliance tools help you meet legal requirements, safeguard your reputation, and reduce financial risk. Because these tools are updated as new regulations and standards emerge, they help keep your AI adoption compliant over time.

With these elements in place, you can leverage AI's power in recruiting while protecting your business against legal, reputational, and ethical risks.

The Path Forward

As we continue to harness the power of AI in recruiting, let's remember that governance isn't about stifling innovation. Rather, it's about creating a framework that allows us to innovate responsibly and sustainably. By prioritizing fairness, transparency, and compliance, we can build AI systems that not only enhance our recruiting processes but also earn the trust of our candidates and stakeholders.

At FairNow, we're committed to helping organizations navigate this complex landscape. We believe that with the right governance practices in place, AI can truly revolutionize recruiting for the better, creating more diverse, inclusive, and effective workplaces.

Let's embrace AI governance not as a burden but as an opportunity to lead the way in ethical and effective AI adoption in recruiting. By doing so, we're not just filling positions; we're shaping the future of work itself.

About the author: Guru Sethupathy has dedicated nearly two decades to understanding the impact of powerful technologies, such as AI, on business value, risks, and the workforce. He has written research papers on bias in algorithmic systems and the implications of AI technology for jobs. At McKinsey, he advised Fortune 100 leaders on harnessing the power of analytics and AI while managing risks. As a senior executive at Capital One, he built the People Analytics, Technology, and Strategy function, leading both AI innovation and AI risk management in HR. Most recently, Guru founded a new venture, FairNow. FairNow’s AI governance software reflects Guru’s commitment to helping organizations maximize the potential of AI while managing risks through good governance. When he’s not thinking about AI governance, you can find him on the tennis court, just narrowly escaping defeat at the hands of his two daughters. Guru has a BS in Computer Science from Stanford and a PhD in Economics from Columbia University.
